Posted At: Mar 03, 2025 - 892 Views

Introduction

Neural networks and deep learning rely on probability functions, weight optimizations, and gradient descent algorithms to improve model predictions. By leveraging mathematical formulations such as logistic regression, backpropagation, and loss minimization techniques, neural networks enhance their ability to classify and predict data effectively.

This study explores key concepts in neural network learning rules, probability functions, and gradient computations, showing their significance in optimizing deep learning models.

🚀 Download the Full Report (PDF): Click Here

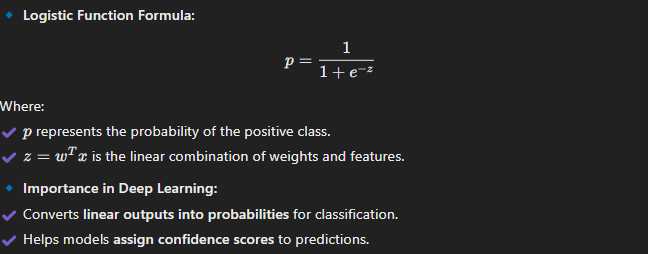

1. Probability Function in Neural Networks

📌 Key Insight: The logistic function transforms real-valued inputs into probabilities between 0 and 1.

💡 Takeaway: The logistic function is crucial for binary classification problems in deep learning and neural networks.

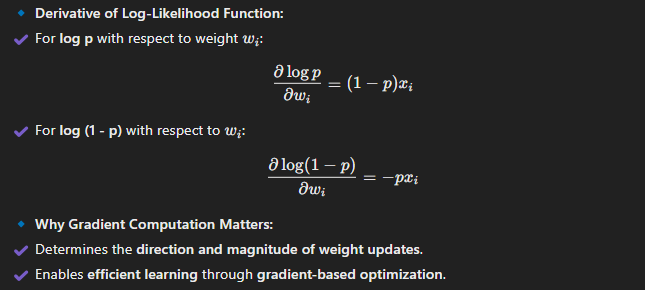

2. Computing Gradients for Weight Optimization

📌 Key Insight: Derivatives help adjust weights by determining how much each weight influences the output.

💡 Takeaway: Gradients drive weight updates in deep learning models, ensuring better predictions over time.

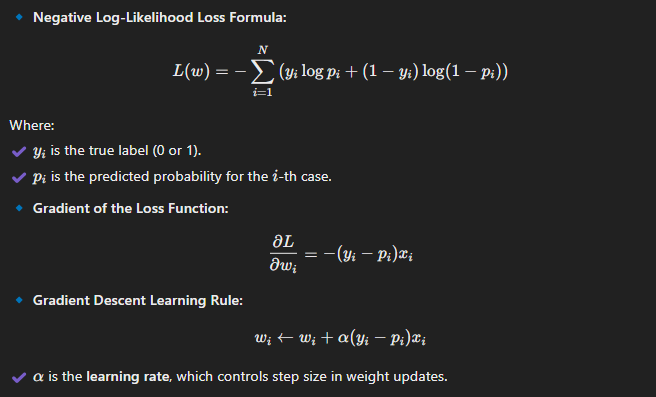

3. Learning Rule for Minimizing Negative Log-Likelihood Loss

📌 Key Insight: The loss function quantifies how well the model performs and guides weight updates.

💡 Takeaway: Loss minimization ensures that neural networks gradually improve accuracy by adjusting weights based on prediction errors.

4. Comparison to Other Machine Learning Learning Rules

📌 Key Insight: Neural network weight updates resemble optimization rules in other machine learning models.

🔹 Gradient Descent in Different Models:

✔ Linear Regression: Adjusts weights based on the difference between actual and predicted values.

✔ Logistic Regression: Uses log probability derivatives for binary classification.

✔ Neural Networks: Applies backpropagation to fine-tune multiple layers.

💡 Strategic Insight: Learning rules across machine learning models share the same goal: minimizing errors and improving prediction accuracy.

5. Practical Applications of Deep Learning Optimization

✔ Image Recognition: Neural networks optimize weights to classify images accurately.

✔ Speech Recognition: Gradient-based models improve audio processing and speech-to-text accuracy.

✔ Financial Forecasting: Deep learning models predict stock trends and risk assessments.

💡 Best Practice: Optimizing learning rules, gradients, and loss functions ensures higher model accuracy and efficiency.

Conclusion

Neural networks and deep learning rely on logistic functions, gradient descent, and loss minimization to refine predictions and enhance model efficiency. The use of log-likelihood loss functions, weight updates, and backpropagation allows AI models to improve accuracy over time.

📥 Download Full Report (PDF): Click Here

Related Machine Learning & AI Resources 📚

🔹 Understanding Backpropagation in Deep Learning

🔹 How Gradient Descent Optimizes Neural Networks

🔹 The Role of Activation Functions in Machine Learning

📌 Need expert guidance on deep learning algorithms? 🚀 Our professional writers at Highlander Writers can assist with AI research, algorithm optimization, and machine learning projects!

Leave a comment

Your email address will not be published. Required fields are marked *